Artificial intelligence is already making an enormous positive impact on our lives, and its potential is greater still. Whether we like it or not, AI sorts our emails into category tabs, shows us ads it thinks we’ll click on, and recommends restaurants based on our location and history.

While it’s far from risk free, people are

AI has an inherent weakness: we can infect it with our own biases, whether consciously or unconsciously. Bias can enter through the definition of the problem submitted to the AI system or through the data we supply to drive its decision-making. Take this example applicable to fintech: if data showed that the residents of a town often default on loans, AI could conclude that all residents of that town are a credit risk. However, more detailed data would show this to be a case of correlation rather than causation: living in the town goes along with defaults but does not cause them.
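The town example can be made concrete with a small sketch. Everything in it is invented for illustration: the town names, the `high_debt` flag, and the counts are hypothetical, not real lending data. It assumes that an applicant's existing debt load, rather than their address, is what actually drives defaults.

```python
from collections import defaultdict

def make_applicants(town, high_debt, count, defaults):
    """Build `count` synthetic applicants, the first `defaults` of whom defaulted."""
    return [
        {"town": town, "high_debt": high_debt, "defaulted": i < defaults}
        for i in range(count)
    ]

# Hypothetical data: Riverton simply has more heavily indebted applicants.
applicants = (
    make_applicants("Riverton", True, 80, 40)
    + make_applicants("Riverton", False, 20, 2)
    + make_applicants("Hillside", True, 20, 10)
    + make_applicants("Hillside", False, 80, 8)
)

def default_rates(rows, key):
    """Return the default rate for each group produced by `key`."""
    totals = defaultdict(int)
    defaults = defaultdict(int)
    for row in rows:
        group = key(row)
        totals[group] += 1
        defaults[group] += row["defaulted"]  # True counts as 1, False as 0
    return {group: defaults[group] / totals[group] for group in totals}

# Coarse view: town only.
by_town = default_rates(applicants, lambda r: r["town"])

# Detailed view: town plus the applicant's own debt load.
by_town_and_debt = default_rates(applicants, lambda r: (r["town"], r["high_debt"]))

print(by_town)
print(by_town_and_debt)
```

Grouped by town alone, Riverton looks far riskier (a 42% default rate against 18% for Hillside). Once debt load is included, the rates are identical in both towns: 50% for heavily indebted applicants and 10% for everyone else. A model trained only on the coarse view would penalize every Riverton resident for a pattern that has nothing to do with where they live.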

Such risks are not merely theoretical. There have already been cases in which AI and machine-learning systems have verifiably discriminated against people because their problems were poorly specified or because they were trained using biased or incomplete data.

Here’s another real-world example: In 2016, the New York Supreme Court ruled in favor of teachers who claimed they had been given unfairly poor appraisals by their AI-based teacher-grading software. Among other issues,

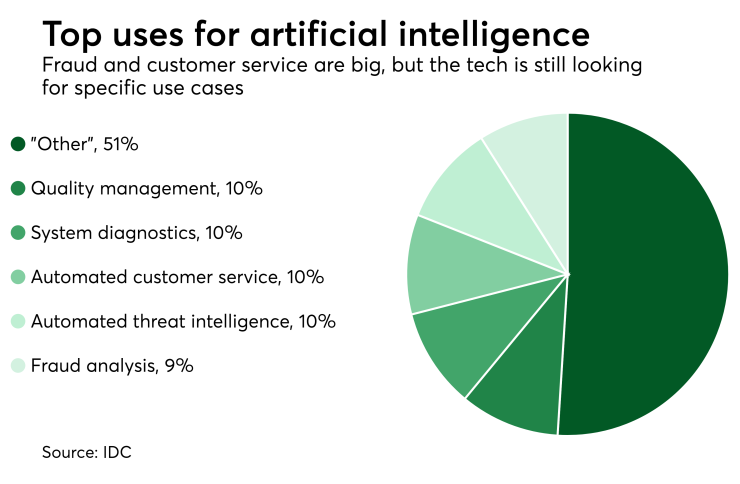

Fintech has already begun leveraging AI in some areas, most notably to address security, prevent fraud, and screen applicants for loans and other financial services. AI is being used to learn patterns of both legitimate and fraudulent payments, build rules defining those patterns, and then apply the rules to identify suspicious transactions. The more transactions that are fed into fintech AI fraud detection systems, the broader the rules become (identifying more suspicious transactions) and the more refined (producing fewer false positives).
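As a rough illustration of that learn, build, and apply loop, here is a deliberately tiny sketch. It is not how any production fraud system works: it assumes a single feature (the payment amount) and a single rule (an amount cutoff), and the function names and transaction records are hypothetical.

```python
def learn_cutoff(history):
    """Pick the amount cutoff that misclassifies the fewest labeled payments."""
    candidates = sorted({tx["amount"] for tx in history})

    def errors(cutoff):
        # A payment is flagged when its amount reaches the cutoff;
        # count how often that disagrees with the known label.
        return sum((tx["amount"] >= cutoff) != tx["fraud"] for tx in history)

    return min(candidates, key=errors)

def is_suspicious(tx, cutoff):
    """Apply the learned rule to a new payment."""
    return tx["amount"] >= cutoff

# Hypothetical labeled history: four legitimate payments, two fraudulent ones.
history = [
    {"amount": 40.0, "fraud": False},
    {"amount": 45.0, "fraud": False},
    {"amount": 55.0, "fraud": False},
    {"amount": 60.0, "fraud": False},
    {"amount": 750.0, "fraud": True},
    {"amount": 900.0, "fraud": True},
]
first_cutoff = learn_cutoff(history)

# More labeled payments arrive, including a smaller fraud and a larger legitimate one.
more_history = history + [
    {"amount": 300.0, "fraud": False},
    {"amount": 500.0, "fraud": True},
]
second_cutoff = learn_cutoff(more_history)

new_payment = {"amount": 600.0}
print(first_cutoff, is_suspicious(new_payment, first_cutoff))
print(second_cutoff, is_suspicious(new_payment, second_cutoff))
```

With the first six payments the learned cutoff is 750, so a 600 payment passes unflagged. After two more labeled payments the cutoff moves to 500: the rule now catches the 600 payment while still leaving the legitimate 300 payment alone. Real systems learn many rules over many features, but the same dependence on labeled data applies, which is why biased or incomplete data matters so much.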

While it’s benefiting from AI, fintech is no more immune to these problems than any other sector. To go back to our earlier example, if banks fail to neutralize bias in the algorithms used to determine who receives access to loans, and on what terms, people will be treated inequitably. The consequences for those affected and for the finance industry could be severe.

The public trusts fintech to provide equitable, fair treatment to consumers, and for the most part, members of the fintech industry want to provide it. AI can support or destroy that goal. So as an industry, we need to take the risks of poor AI design and algorithmic bias seriously.