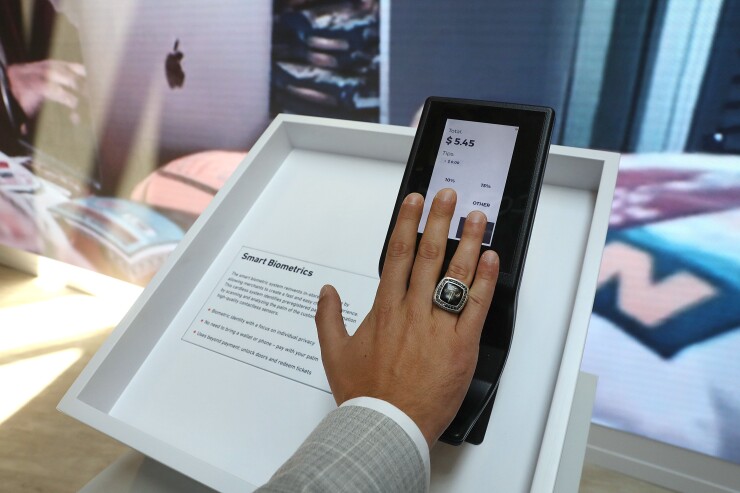

Technology improvements have made biometrics a highly convenient way for customers to authenticate their identities.

However, even as the use of biometrics by banks increases, there's a growing risk that criminals will use AI to defeat these systems.

Not surprisingly, examples of AI-generated deepfakes being used for criminal purposes abound. iProov, which provides biometric authentication, identity verification and digital onboarding services, reported a whopping 704% increase in face-swap attacks, a type of deepfake, between the first and second halves of 2023. Broadly speaking, deepfakes are videos, photos or audio recordings that appear genuine but have been manipulated by AI, often for criminal purposes.

A few months ago, London-based engineering company Arup confirmed that an employee had been duped into sending $25 million to fraudsters who posed as the company's chief financial officer in a deepfake video conference call. And in March 2019, criminals reportedly impersonated a chief executive's voice to weasel $243,000 out of a U.K.-based energy company.

Here are four steps banks can take to shore up their defenses when using biometrics:

Vigilantly test the bank's systems on an ongoing basis

Defenses that worked for the bank in the past may be outdated now that AI tools have proliferated.

"A bank's voice authentication system may have been 100% accurate until now given that the technology changed to make deepfakes more possible," said Vasant Dhar, an artificial intelligence researcher and professor of data science at the Leonard N. Stern School of Business at New York University. Now that these systems are more vulnerable, banks need to be even more vigilant about testing, he said. That includes feeding the system large numbers of fake voice samples and measuring how often one breaks through the bank's defenses.

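The testing Dhar describes comes down to a simple metric: of all the fake samples thrown at the system, what fraction got through? (This is roughly what the ISO/IEC 30107-3 standard calls the attack presentation classification error rate, or APCER.) The Python sketch below computes that rate, plus the share of genuine customers wrongly turned away, from a batch of test results. The `SampleResult` record and its fields are hypothetical; a real harness would fill them in from the bank's own authentication system, and the sample data here is made up for illustration, not real measurements.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SampleResult:
    sample_id: str
    is_fake: bool   # True if the sample was synthetic, e.g. an AI-cloned voice
    accepted: bool  # True if the authentication system let the sample through


def spoof_acceptance_rate(results: Sequence[SampleResult]) -> float:
    """Fraction of fake samples the system accepted; lower is better."""
    fakes = [r for r in results if r.is_fake]
    if not fakes:
        raise ValueError("test run contains no fake samples")
    return sum(r.accepted for r in fakes) / len(fakes)


def genuine_rejection_rate(results: Sequence[SampleResult]) -> float:
    """Fraction of real customers the system turned away; the convenience cost."""
    genuine = [r for r in results if not r.is_fake]
    if not genuine:
        raise ValueError("test run contains no genuine samples")
    return sum(not r.accepted for r in genuine) / len(genuine)


# Illustrative test run (made-up results, not real measurements).
run = [
    SampleResult("fake-001", is_fake=True, accepted=False),
    SampleResult("fake-002", is_fake=True, accepted=True),
    SampleResult("fake-003", is_fake=True, accepted=False),
    SampleResult("fake-004", is_fake=True, accepted=False),
    SampleResult("real-001", is_fake=False, accepted=True),
    SampleResult("real-002", is_fake=False, accepted=True),
]

print(f"spoof acceptance: {spoof_acceptance_rate(run):.0%}")    # spoof acceptance: 25%
print(f"genuine rejection: {genuine_rejection_rate(run):.0%}")  # genuine rejection: 0%
```

Tracking both numbers across repeated test runs shows whether a change that blocks more fakes also starts turning away more real customers.
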
Link silos

Banks are good at recognizing credit card fraud, for example, but many fall short when it comes to linking the bank's different silos from a security perspective, said Zilvinas Bareisis, who leads the retail banking and payments practice at Celent. Ideally, if a customer recently made a credit card transaction somewhere in Europe and, moments later, is trying to send money from his or her home state in the U.S., the bank should connect the two events and recognize that something is amiss. Banks need to be able to look at a customer's activity across all the accounts and account types that customer holds with the institution, he said.
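One common way to catch the scenario Bareisis describes is an "impossible travel" check: merge events from every account type into a single timeline per customer, then flag any two consecutive events that no one could physically connect in the time between them. The sketch below is a minimal illustration, assuming each event already carries a geolocated latitude and longitude. The `Event` fields, the 1,000 km/h speed cutoff and the 50 km distance floor are hypothetical values chosen for the example, not an industry standard.

```python
import math
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Tuple

EARTH_RADIUS_KM = 6371.0
MAX_SPEED_KMH = 1000.0   # hypothetical cutoff, a bit faster than a commercial jet
MIN_DISTANCE_KM = 50.0   # hypothetical floor to absorb IP/GPS geolocation error


@dataclass
class Event:
    customer_id: str
    channel: str          # which silo produced it, e.g. "credit_card" or "transfer"
    timestamp: datetime
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def impossible_travel(prev: Event, curr: Event) -> bool:
    """True if no traveler could cover the distance between the two events in time."""
    km = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if km < MIN_DISTANCE_KM:
        return False
    # Clamp elapsed time to at least one second to avoid dividing by zero.
    hours = max(abs((curr.timestamp - prev.timestamp).total_seconds()), 1.0) / 3600
    return km / hours > MAX_SPEED_KMH


def flag_across_silos(events: List[Event]) -> List[Tuple[Event, Event]]:
    """Merge events from every silo into one timeline per customer; flag suspicious pairs."""
    flagged = []
    last_seen: Dict[str, Event] = {}
    for ev in sorted(events, key=lambda e: e.timestamp):
        prev = last_seen.get(ev.customer_id)
        if prev is not None and impossible_travel(prev, ev):
            flagged.append((prev, ev))
        last_seen[ev.customer_id] = ev
    return flagged


# Example: a card purchase in Paris, then a transfer from Ohio five minutes later.
events = [
    Event("c42", "credit_card", datetime(2024, 6, 1, 12, 0), 48.8566, 2.3522),
    Event("c42", "transfer", datetime(2024, 6, 1, 12, 5), 39.9612, -82.9988),
]
for prev, curr in flag_across_silos(events):
    print(f"{curr.customer_id}: {prev.channel} -> {curr.channel} looks impossible")
```

The check only works if the card and transfer systems feed the same timeline, which is exactly the silo problem: each system on its own sees nothing unusual.
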

Join data-sharing networks

Even the largest bank has limited visibility into a customer's behavior, so sharing information in-house isn't enough, Bareisis said. For a broader view of customer behavior, banks should consider commercially available data-sharing networks, which aim to merge offline and online data in near real time to detect anomalies and prevent fraud.

"The idea of shared intelligence is really important as we try to fight fraud attacks, whether biometric or not," said Kimberly Sutherland, vice president of fraud and identity strategy at LexisNexis Risk Solutions, which offers a product that leverages data from about 3 billion monthly transactions. "Banks are trying to get as many passive risk signals without impacting the customer's experience," Sutherland said.

Understand the limitations

There's no perfect solution, and there will always be vulnerabilities, Dhar said. It's a balancing act: banks want the most secure solution they can find without sacrificing too much convenience for customers. "You want to put as little friction as possible in the process and still be secure," he said.

Sometimes, however, extra safeguards are necessary to prevent bigger problems. For example, users can often make transactions on their mobile phones after authenticating with a fingerprint. But for large transactions, banks should consider additional layers of protection to verify the user's identity, Bareisis said. And if a bank is onboarding a new customer by asking the person to take a selfie and submit it along with a driver's license, it would behoove the bank to also insist on a live video call as an added layer of security, he said.
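Step-up rules like the ones Bareisis recommends can be written as a small policy function. The sketch below assumes a hypothetical $5,000 cutoff for a "large" payment and uses a one-time passcode as a hypothetical choice of extra factor; a bank would set its own thresholds and pick its own additional checks.

```python
from enum import Enum
from typing import List

LARGE_PAYMENT_USD = 5_000.0  # hypothetical threshold; each bank would set its own


class Check(Enum):
    FINGERPRINT = "fingerprint"
    ONE_TIME_PASSCODE = "one-time passcode"  # hypothetical choice of extra factor
    SELFIE = "selfie"
    DRIVERS_LICENSE = "driver's license"
    LIVE_VIDEO_CALL = "live video call"


def required_checks(action: str, amount_usd: float = 0.0) -> List[Check]:
    """Return the identity checks this hypothetical policy demands for an action."""
    if action == "payment":
        checks = [Check.FINGERPRINT]
        if amount_usd >= LARGE_PAYMENT_USD:
            # Large payments get an additional layer beyond the biometric.
            checks.append(Check.ONE_TIME_PASSCODE)
        return checks
    if action == "onboarding":
        # The live video call is an added layer on top of the selfie and license.
        return [Check.SELFIE, Check.DRIVERS_LICENSE, Check.LIVE_VIDEO_CALL]
    raise ValueError(f"unknown action: {action!r}")


print([c.value for c in required_checks("payment", 120)])     # ['fingerprint']
print([c.value for c in required_checks("payment", 25_000)])  # ['fingerprint', 'one-time passcode']
```

Keeping the extra step confined to large amounts reflects Dhar's point about friction: everyday payments stay a single touch.
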

Behavioral analytics can also help authenticate transactions initiated with biometrics, Bareisis said. For example, is the user's IP address consistent with who they claim to be, and does the transaction's time stamp match when the person would normally be expected to bank?
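A minimal sketch of the two behavioral checks just mentioned, assuming the bank already stores each customer's home country and usual banking hours, and that the IP address has already been resolved to a country (the lookup itself is not shown). The profile fields and the choice to return a list of reasons are hypothetical, for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Set


@dataclass
class CustomerProfile:
    home_country: str      # hypothetical field, e.g. "US"
    usual_hours: Set[int]  # hours of day (0-23, customer's local time) they usually bank


@dataclass
class Transaction:
    ip_country: str        # country already resolved from the IP address (lookup not shown)
    local_time: datetime   # transaction time in the customer's local time zone


def behavioral_flags(profile: CustomerProfile, txn: Transaction) -> List[str]:
    """List the behavioral signals that don't fit the customer's usual pattern."""
    flags = []
    if txn.ip_country != profile.home_country:
        flags.append("ip_country_mismatch")
    if txn.local_time.hour not in profile.usual_hours:
        flags.append("unusual_hour")
    return flags


profile = CustomerProfile(home_country="US", usual_hours=set(range(7, 23)))
print(behavioral_flags(profile, Transaction("US", datetime(2024, 6, 1, 14, 30))))  # []
print(behavioral_flags(profile, Transaction("FR", datetime(2024, 6, 1, 3, 15))))
# ['ip_country_mismatch', 'unusual_hour']
```

A flag here is a prompt for additional verification, not proof of fraud; a customer traveling abroad would legitimately trip the first check.
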

"Banks don't want to rely on biometrics alone," Telang said.