Amid recent calls to ban all uses of facial recognition systems, even seemingly benign ones such as giving customers a more convenient way to log in to mobile banking, banks may have to rethink their use of the technology.

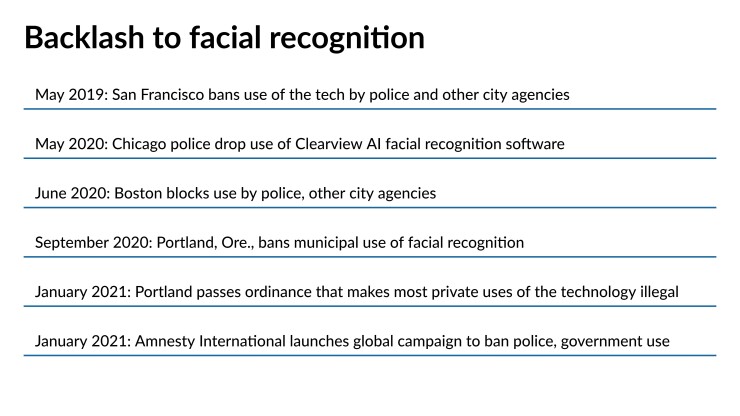

Facial recognition tech tries to match an image of a person’s face captured through a phone, video camera or other device with images in a database. Several cities, including San Francisco, Boston, Chicago and Portland, Ore., have banned the use of the technology by police and other government agencies. The most recent wave of objections to the technology stems from police arrests of participants in Black Lives Matter protests.

But private companies like banks are not immune to consumer activists' ire at misuse of this technology, and groups like Fight for the Future have called for banks, too, to stop using it altogether.

The overriding fear about banks’ use of facial recognition is that it could result in people getting cut off from normal access to banking services. They might be denied loans or other services or charged higher rates because of, say, the color of their skin or their sex.

“Part of the concern of facial recognition in general, and specifically in banks, is that it augments existing bias in our society,” said Caitlin Seeley George, campaign director at Fight for the Future, a Boston-based nonprofit group that advocates for digital rights. “Banks have huge impacts on people's lives, on whether or not they can get a loan to buy a home or start a business or get the financial support they need. And in challenging times like we're having right now, banks have huge value in people's lives. And if they're using facial recognition, they can use that to discriminate against people for a whole host of reasons.”

Activists have also raised concerns about the risk of errors. A bank could compare the facial images of potential customers or employees against publicly available mugshot databases and mistreat people who are misidentified.

Face recognition logins

The main way banks use facial recognition today is to let people log in to their mobile banking apps without having to enter a username and password. USAA was the first to do this: It began piloting and testing biometric authentication in 2014 and rolled it out to all members in 2015. In 2017, Apple released Face ID; other manufacturers followed.

USAA does not store raw biometric data, such as faceprints, as part of its authentication and security processes.

“Use of these types of biometric authentication methods includes processing of biometric templates, which is an irreversible mathematical representation of an individual’s identity,” said Mike Slaugh, executive director of fraud protection at USAA. As a result, USAA has no faceprints that could be matched against a criminal database, Slaugh said.

Customers' faceprints are never tagged, and they are never shared with others such as police and retailers, Slaugh said.

“We are committed to protecting the privacy of our members,” he said.

Many banks, including Wells Fargo and U.S. Bank, similarly let customers authenticate themselves for mobile banking using the facial recognition built into their mobile devices, without having to enter a username or password. Customers' biometric data is stored on their devices, and the banks do not have access to it.

This feels like a safe use of the technology. After all, customers choose this method of logging in for its convenience, and the faceprint is stored on the customer’s device. Some opponents of facial recognition make an exception for this use case. For instance, the Electronic Frontier Foundation does not support banning private use of facial recognition.

“Instead of a prohibition on private use, we support strict laws to ensure that each of us is empowered to choose if and by whom our faceprints may be collected,” the EFF has said.

Portland, Ore., which passed an ordinance in January banning the use of facial recognition by private businesses in places of public accommodation, exempts the use of this technology for "user-verification purposes" on an individual's personal or employer-issued "communication and electronic devices."

But some advocates, including Fight for the Future, still object to the use of faceprints for banking logins.

“We are concerned with how these casual use cases are making people more comfortable with the technology without really educating them about the concerns and dangers,” George said. “Such casual facial recognition is really problematic because it normalizes its use across society. And especially in these cases where there’s opt-in consent, we think it's really problematic because in order to have meaningful consent to do something, you need to understand the potential harms. With facial recognition, people don't.”

George also worries that face scans could be stored in databases that could be hacked.

“Unlike our credit cards, when those databases are hacked and stolen from, you can't replace your face,” George said. “So there is an inherent harm to opting into those use cases.”

Colin Whitmore, senior analyst at Aite Group, warned that banks could compare new customers’ images against a criminal or sanctions database in an attempt to weed out people with a criminal past. Such efforts are full of pitfalls, he said.

“There’s a growing objection to the use of facial recognition verifying identity and checking if somebody's got a criminal record, because the systems can be so wrong,” said Steve Hunt, senior analyst at Aite. “And it does seem like people in minority groups are more likely to be misidentified.” This is why banks are cautious with the technology, he said.

Surveillance of branch visitors

Some banks are experimenting with the use of facial recognition in branches, so that customers are recognized as they walk in and employees are fed vital details about them in the interest of improving service.

If such technology were used to kick out people who have a criminal or terrorist record, that could be a problem, again because facial recognition is often inaccurate, especially when applied to members of minority groups.

“That technology has been available for 15 years now,” Hunt said. “There are banks dabbling in it and many customers would like it if a personal banker walked out and greeted them by name.”

Some customers, however, would find it creepy to be greeted by a banker who had just been handed the latest information about their account, Whitmore added.

Other banks, including JPMorgan Chase, are experimenting with video analytics in their branches, according to a recent Reuters article.

Hunt points out that video analytics technology is not the same thing as facial recognition. It detects motion, but it is not typically used to identify people.

“That sort of thing is being used in branches and is very reasonable,” Hunt said. USAA does not use facial recognition in or around branches. Other banks contacted for this article did not comment on their use of the technology in branches.

Surveillance of ATM vestibules

Some banks are using facial recognition to target the homeless, the Reuters article said. The story quoted an anonymous security executive at a midsize Southern bank that uses video analytics software. The software “monitors for loitering, such as the recurring issue of people setting up tents under the overhang for drive-through ATMs. Security staff at a control center can play an audio recording politely asking those people to leave,” the executive said.

Whitmore and Hunt reiterated that video analytics technology generally doesn’t involve facial recognition.

“It’s just a camera with an activity sensor,” Whitmore said.

If facial recognition were to be integrated into such a system, so that homeless people were being identified and tagged, that would raise privacy issues.

“The concern is definitely more about the AI that can be used and that can tag someone who is a homeless person as homeless and assign them that identity that could then potentially be used against them in different situations,” George said. “You could also imagine a case where that information could be shared with police, or it could be shared with other retailers. People could be marked as homeless and targeted and discriminated against in other situations.”

In her view, banks’ money would be better spent trying to actually help people who are homeless, for instance by helping fund housing options.

“We know that surveillance technology is most often directed toward homeless people, people of color and low-income communities,” she said. “And there's this tendency to police marginalized people in our society in a way that is biased and problematic and leads to greater arrests of these people unnecessarily and puts them in danger.”

Another danger of facial recognition at an ATM or in a branch is misidentification.

If legitimate customers get repeatedly rejected at the ATM, they might justifiably see that as racial bias, Whitmore observed.

Employee surveillance

The use of facial recognition on people applying for jobs at, or working in, banks is also controversial.

“This is another case where you can't get meaningful consent to be tracked by facial recognition,” George said. “If someone needs a job, they might not be able to ‘opt out’ and quit if their workplace starts using facial recognition. It's not fair to ask people to pick between their job and their privacy.”

Whitmore said that if a person applying for a job provided a faceprint, a bank could scan social media, find loads of pictures of that person in a bar and decide, “We don't think you're the right type of employee to come and work for us, or you've associated with these people.”

Among banks contacted for this article, USAA said it does not use facial recognition in employee surveillance. Other banks declined to answer that question.

Fight for the Future takes the stance that people should not be under surveillance all the time.

“If you're under surveillance 40 hours a week at work, that's a pretty large chunk of your life,” George said. “We also know that workers being surveilled can then be targeted by management and be harassed.”

The group also generally opposes amassing large amounts of data about people, which could be handed over to law enforcement or border control authorities and used to target and harm immigrant communities.