As banks roll out large language models to employees, to help them do things like

Yet large language models generate errors and hallucinations in a way that earlier forms of AI do not, and some experts say there's no way to completely mitigate these risks.

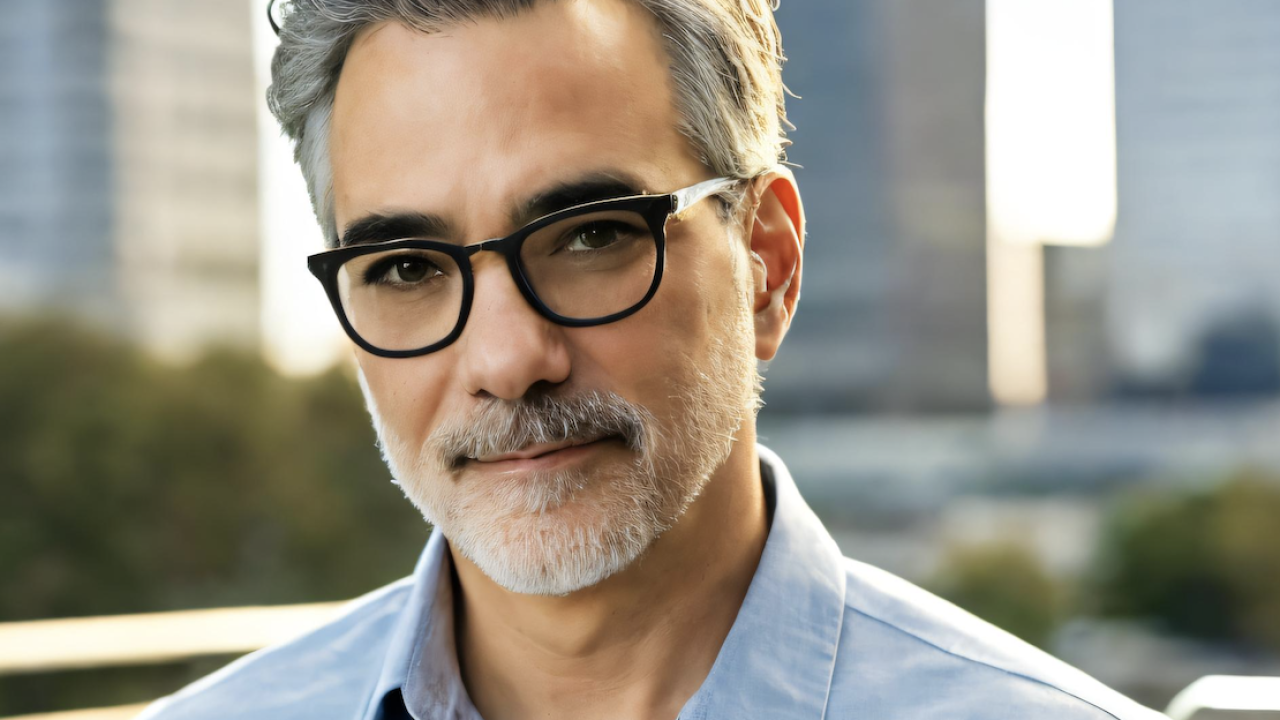

"Essentially these large language models are trained on the whole of the internet," said Seth Dobrin, former global chief AI officer at IBM, founder of advisory firm Qantm AI, and general partner at 1infinity Ventures, in a recent

Not everyone agrees.

"The belief that the larger models are, the more prone they are to hallucinations is not necessarily accurate," said Javier Rodriguez Soler, head of sustainability at BBVA, in an email interview. "In fact, it's typically the opposite: all things equal, larger models tend to hallucinate less due to their improved capacity to build a better representation of the world. For example, [OpenAI's] GPT-4 is estimated to hallucinate around 40% less than GPT-3.5 while being much larger. As AI technology progresses, we can expect new generations of models to further reduce hallucinations."

However, Soler admitted that hallucinations remain an unsolved challenge. To address this, he said, BBVA has set up a dedicated team to evaluate, test and implement safeguards for AI applications. For critical applications, where even a single mistake is unacceptable, the bank will not use large language models if they can't meet its requirements.

Hallucination

The most widely recognized drawback of large language models is their tendency to hallucinate, or make stuff up. Most LLMs predict the next word (technically, the next token) in a sequence, based on the data on which they've been trained and a statistical calculation.
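As a rough illustration of that statistical step, here is a minimal Python sketch. The candidate tokens and their scores are invented for the example and do not come from any real model; the point is only that a plausible but wrong token can carry enough probability to be chosen some of the time.

```python
import math
import random

# Hypothetical scores a model might assign to candidate next tokens after
# the prompt "The first image of an exoplanet was taken in".
# These numbers are invented for illustration only.
logits = {"2004": 2.0, "2021": 1.5, "1995": 0.2}

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample_next_token(scores, rng):
    """Pick one token at random, weighted by its probability."""
    probs = softmax(scores)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

probs = softmax(logits)
rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_next_token(logits, rng) for _ in range(1000)]

# Share of draws that picked something other than the most likely token.
wrong_share = sum(d != "2004" for d in draws) / len(draws)
print(probs)
print(f"share of draws that were not '2004': {wrong_share:.2f}")
```

In this toy setup the model has no notion of which year is true. It only has relative scores, so a less likely token is still selected in a meaningful share of draws.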

"AI hallucination is a phenomenon wherein a large language model … perceives patterns or objects that are nonexistent or imperceptible to human observers, creating outputs that are nonsensical or altogether inaccurate," IBM explains on its

Last year, shortly after Google launched its Bard large language model-based chatbot (since renamed Gemini), the chatbot answered a question about the James Webb Space Telescope with the "fact" that the telescope, which launched in December 2021, took the first pictures of an exoplanet outside our solar system. In reality, the first image of an exoplanet was taken by the European Southern Observatory's Very Large Telescope in 2004, according to NASA. Google said it would keep testing the model.

Bankers often say they use retrieval augmented generation, or RAG, to keep their generative AI deployments safe from hallucination. RAG is an AI framework that grounds models on specific sources of knowledge. For a bank, this might be its internal policy documents or codebase.
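A simplified sketch of the grounding step might look like the Python below. Everything in it is an assumption made for illustration: the policy snippets are made up, the word-overlap ranking stands in for the embedding search a production system would more likely use, and no model is actually called. It shows only how retrieved text is placed in front of the question.

```python
import re

# Hypothetical internal policy snippets. A real deployment would index
# actual bank documents, typically with embeddings rather than word overlap.
DOCUMENTS = {
    "wire-policy": "Wire transfers above 10000 dollars require manager approval.",
    "card-policy": "Lost cards must be reported and blocked within 24 hours.",
    "sar-policy": "Suspicious activity reports are reviewed by a compliance analyst.",
}

def tokenize(text):
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(q & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Assemble a prompt that tells the model to answer only from retrieved text."""
    hits = retrieve(question, documents)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

prompt = build_prompt("Who must approve large wire transfers?", DOCUMENTS)
print(prompt)
```

The prompt narrows what the model is asked to draw on, but the underlying model is unchanged, so nothing in this step guarantees that the answer stays within the supplied context.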

But banks may be hanging too much hope on this idea, according to Dobrin.

"With retrieval augmented generation, these models are still trained on the whole of the internet," Dobrin said. "They still use GPT-4, they still use Llama, they still use Claude from Anthropic, they still use Gemini. So they still have the hallucination problem. What they're doing is limiting the response to a particular set of data."

Studies have shown that "these models still hallucinate at a pretty high rate, a pretty unacceptable rate for an organization like a bank," Dobrin said. "That's not to say that these models don't have value in things like summarization and helping you write emails as long as humans are there checking and reworking and things like that. But you don't want these doing vital tasks. You don't want them fully automating information that's being used in critical customer interactions or critical decisions."

Small, task-specific AI models can get close to zero hallucination, he said.

Some vendors talk about offering hallucination-free LLMs. For instance, one talks about unifying databases into a knowledge graph to use with semantic parsing for grounding LLM outputs, thereby eliminating hallucinations.

Techniques like these could reduce the likelihood of hallucination to less than 10%, Dobrin said. "But it's not zero, it's not close to zero."


A related danger is that a large language model could pull a piece of wrong or outdated information from its training data and present it as fact.

For instance, a user who typed "cheese not sticking to pizza" into Google AI Overviews, a feature of Google Search powered by a Google Gemini large language model, was advised to add glue to the sauce.

In this case, Gemini apparently picked up on a joke a user made on Reddit 11 years ago.

Many industry observers see hallucination and error as problems but not dealbreakers.

"I think we haven't solved the hallucination problem," Ian Watson, head of risk research at Celent, said in an interview. "There are ways to reduce the instances of it."

For instance, one large language model could be set up to critique another LLM's work.

"That has been shown to massively reduce the amount of false information and hallucinations that come out of one AI model," Watson said. "That's probably going to be one of the most effective ways to govern AI output."
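The pattern Watson describes can be sketched in a few lines of Python. Both "models" here are hypothetical stand-ins written as plain functions, not real LLM calls, and the critic's rule is a deliberately crude placeholder. The sketch shows only the control flow: a draft is released when the reviewer accepts it and is otherwise routed to a person.

```python
# Both "models" are hypothetical stand-ins: plain functions, not real LLM calls.

def draft_model(question):
    # Deliberately returns the incorrect claim described earlier in the article.
    return "The James Webb Space Telescope took the first picture of an exoplanet."

def critic_model(question, draft):
    """Return True if the draft passes review.

    This toy critic flags a single known false claim. A real critic would be
    a second language model asked to check the draft against trusted sources.
    """
    return "James Webb" not in draft

def answer_with_review(question, generate, critique):
    """Generate a draft, then release it only if the critic accepts it."""
    draft = generate(question)
    if critique(question, draft):
        return {"status": "approved", "text": draft}
    # Rejected drafts are not sent out; they are escalated to a human reviewer.
    return {"status": "needs_human_review", "text": draft}

question = "Which telescope took the first image of an exoplanet?"
result = answer_with_review(question, draft_model, critic_model)
print(result["status"])
```

A critic of this kind can miss errors too, which is one reason to send rejected drafts to a human reviewer instead of regenerating them automatically.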

Safe for some use cases

Many experts see large language models as safe to use as long as there is a human in the loop.

"When people say it's never going to be error-free, I don't find that as troubling because I always think there's going to be some expectation that people are going to have to review the output, and that the use cases that are going to thrive are going to be ones where AI is an aid to something that people need to do, but it's not completely replacing the human element," said Carlton Greene, a partner at Crowell & Moring.

For instance, it could help draft suspicious activity reports, referred to as SARs, Greene said.

"One of the big burdens in the anti-money-laundering space is drafting SARs," he said. "There's a standardized format in terms of what needs to be in the narrative, and it's possible that AI could do a lot to help prepare those forms."

But humans do need to fact-check these reports, Greene warned.

"You could imagine all kinds of ways that things could go wrong if it were to hallucinate and make up suspicious activity about a bank customer that then resulted in an investigation, because those SARs get made available to law enforcement, so you could see all kinds of potential issues."

But with human review, the use of LLMs could save a lot of time for this rote task, he said.

Several experts interviewed for this article said all the risks of large language models are surmountable.

"What I'm seeing in nature is with RAG, with prompt engineering, with model refinements for specific industries within specific regulatory guardrails, you can get good results," Alenka Grealish, senior analyst at Celent, said in an interview. "You have to have humans in the loop. You have to have a closed loop system for when something does go awry, that it's visible, it's recognized and it's stopped and corrected."

Not using generative AI because of the risks is "like saying, well, there's always a chance that a car will run into a child, so I can't drive it," Bjorn Austraat, CEO and founder of Kinetic Cognition, said in an interview. "But you can still just drive safely."